Alive

It was a sensation when the new Flux models became public. On the first of August, Black Forest Labs launched. It was founded by the people who developed the Stable Diffusion generator at university before they joined the startup Stability AI. They surprised everybody with new models and a new company. Over the past weeks we evaluated the freely accessible models in detail, focusing on pictures of humans, animals and creatures. There are three models:

FLUX1.1 [pro]

FLUX.1 [dev]

FLUX.1 [schnell]

The Flux dev and Flux schnell models are free to download. FLUX.1 [schnell] is released under the Apache 2.0 license, while FLUX.1 [dev] is licensed for non-commercial, private use only. Using Dev commercially is possible, but of course you must pay for it! The Flux pro model can only be accessed via the API or through Black Forest Labs' partners, and it costs money to use. You can find the API partners on their homepage: https://blackforestlabs.ai/#get-flux

Go for ComfyUI

Without paying a cent, you can try out the Schnell and Dev models in ComfyUI! You can download these models legally and for free from Hugging Face or directly via ComfyUI. Countless tutorials on how to set up ComfyUI and how to work with it are available online, like this one

Good to know about the Flux model

Power is needed

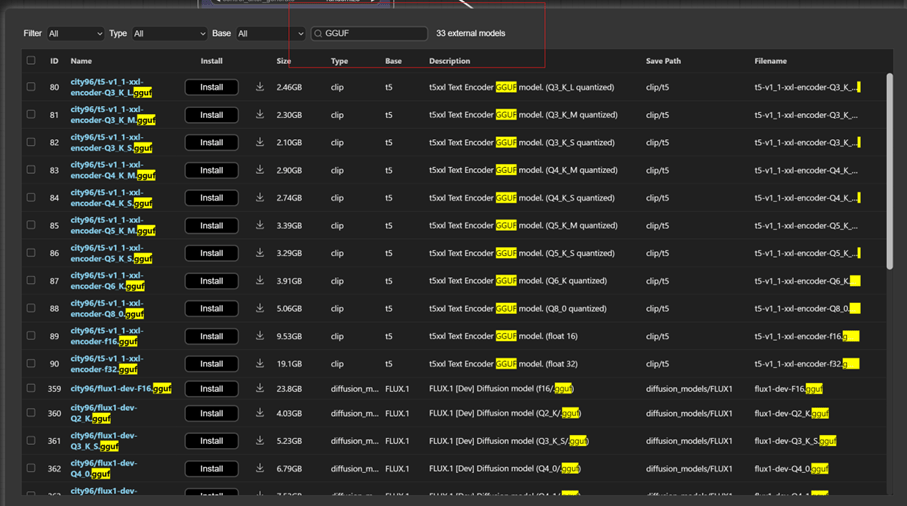

Altogether, the Flux models are a beast! A lovely beast regarding the quality of the output pictures and a horrible beast regarding the requirements for your computer! We highly recommend a computer with an RTX 4080 (a 16 GB graphics card) and at least 48 GB of RAM! BAM! People will tell you that there are workarounds and smaller models. But rendering pictures means testing and re-testing, rendering and re-rendering, so just go for it if you have the computing power! One option is to use the so-called quantized or GGUF versions of Flux, which are made for lower VRAM. These models work with ComfyUI and are incredibly good alternatives to the Schnell and Dev models! You can download them inside ComfyUI or via GitHub:

(example for downloading models via ComfyUI)
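Why do the quantized models help so much? Here is a rough back-of-the-envelope sketch. It assumes the roughly 12 billion parameters that Black Forest Labs states for FLUX.1 [dev] and [schnell], and it assumes that the GGUF formats Q8_0 and Q4_0 store about 8.5 and 4.5 bits per weight. It only counts the weights of the image model. The T5 and CLIP text encoders, the VAE and the working memory during rendering come on top, so treat the numbers as a lower bound.

```python
# Rough memory needed for the Flux image model's weights at different precisions.
# Assumptions (not measured by us): ~12e9 parameters, and approximate
# bits-per-weight values for the GGUF formats Q8_0 and Q4_0.

FLUX_PARAMS = 12e9  # assumed parameter count of FLUX.1 [dev]/[schnell]

FORMATS = {
    "bf16 (full checkpoint)": 16.0,
    "GGUF Q8_0": 8.5,
    "GGUF Q4_0": 4.5,
}


def weight_size_gib(num_params: float, bits_per_weight: float) -> float:
    """Return the memory needed for the weights alone, in GiB."""
    return num_params * bits_per_weight / 8 / 2**30


if __name__ == "__main__":
    for name, bits in FORMATS.items():
        print(f"{name:<24} ~{weight_size_gib(FLUX_PARAMS, bits):5.1f} GiB")
```

At 16 bits, the weights alone come to about 22 GiB, which is close to the 23 GB checkpoint size mentioned for the Dev model and more than a 16 GB card can hold. The 8-bit and 4-bit variants drop to roughly 12 and 6 GiB.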

When you do your first test renders with the Flux models and you are not new to the Stability AI models, you can “feel” and see that they are related. The pictures are quite similar. For example, rendered humans often show the two front teeth. By the way, just use the word “smile” or something similar in your prompt if you do not want to see these teeth again!

Prompting

Prompting still takes time to figure out. Long, novel-like prompts are very welcome. The models can handle detailed information, and the old quality tags like “masterpiece, 8k, UHD, best detail, Ultra-HD, high details, hyper realistic” and so on are no longer needed. On the other hand, negative prompting is not possible with any Flux model anymore, and sometimes we wish it were back. We will talk about quality a little later. What is known is that prompting is not quite the same for the three Flux models. The mechanics behind them are also a little different from what you might know. Both the Stability AI models and Flux use a token-based CLIP text encoder, and Flux additionally uses a so-called T5 (Text-to-Text Transfer Transformer) encoder, which understands natural language. The T5 encoder has the greater influence on image generation. Writing long, detailed prompts, for example with the help of ChatGPT or another LLM, is not a bad idea. We tried both, and we now use something like a mix of T5-style natural language and token-based prompting. This might be related to our own prompting style! But you are the artist. It is up to you how you prompt and what gives you the best results.
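To make the prompting advice concrete, here is a small sketch that does two things. It drops the old quality tags listed above from an existing Stable Diffusion prompt. It also keeps a short tag list for the CLIP encoder separate from a natural-language description for T5. The helper names and the `clip_l`/`t5xxl` keys are our own choice. They mirror the two text fields that, as far as we know, ComfyUI's `CLIPTextEncodeFlux` node offers, but check the node in your own installation.

```python
# Hypothetical prompt helpers for Flux; the names are illustrative only.

# Quality tags from Stable Diffusion days that, in our experience, Flux no longer needs.
LEGACY_QUALITY_TAGS = {
    "masterpiece", "8k", "uhd", "best detail",
    "ultra-hd", "high details", "hyper realistic",
}


def strip_quality_tags(prompt: str) -> str:
    """Remove legacy quality tags from a comma-separated prompt (case-insensitive)."""
    parts = [part.strip() for part in prompt.split(",")]
    kept = [part for part in parts if part and part.lower() not in LEGACY_QUALITY_TAGS]
    return ", ".join(kept)


def split_prompt(tags: list[str], description: str) -> dict[str, str]:
    """Short tags for the token-based CLIP encoder, full sentences for T5."""
    return {"clip_l": ", ".join(tags), "t5xxl": description.strip()}


if __name__ == "__main__":
    old = "masterpiece, 8k, a red fox sitting in fresh snow, UHD"
    print(strip_quality_tags(old))
    print(split_prompt(
        ["red fox", "snow", "winter forest"],
        "A red fox sits in fresh snow at the edge of a quiet winter forest, "
        "photographed at eye level in soft morning light.",
    ))
```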

Let us talk about the model details

Schnell model

The German adjective “schnell” means “fast”. That is a good name for a speed-oriented model, and it can create amazing pictures. You can also play around with the number of render steps. We got the best results with between 6 and 18 steps. It follows camera-position prompts like “front view” or “side view” more strictly. It is hard to recommend which scheduler is best, because it is a matter of taste and even depends on your computer system. Start with the Euler scheduler and then try others. This model is perfect for getting started with Flux and getting a better feel for these new models.

(example Flux-Schnell picture)
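Testing steps and schedulers is easiest when only one thing changes at a time. The following is a minimal sketch of a test plan. It assumes you keep the seed and prompt fixed, vary the step count across the 6 to 18 range we found useful, and start with Euler. The job dictionaries use a hypothetical format, so translate them into whatever your ComfyUI workflow or API page expects, and replace the sampler names with the ones your installation lists.

```python
from itertools import product


def schnell_test_grid(seed: int, steps=range(6, 19, 4), samplers=("euler",)) -> list[dict]:
    """Build render jobs that share one seed and differ only in steps and sampler."""
    return [
        {"seed": seed, "steps": n_steps, "sampler": sampler}
        for sampler, n_steps in product(samplers, steps)
    ]


if __name__ == "__main__":
    # Start with Euler only; add a second sampler once you have a baseline.
    for job in schnell_test_grid(seed=1234, samplers=("euler", "dpmpp_2m")):
        print(job)
```

Because the seed stays the same, differences between the pictures come from the settings and not from random noise.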

Pro model

Overall, we do not have a real feel for the Pro model yet. Black Forest Labs currently calls its own Pro model the best on the market. Because we cannot access it for free, we tried it on a partner page. It looks like an upgrade of the Dev model! Prompting is very restricted. Even words like “sexy” or “cleavage” prevent it from rendering. The price of 5 cents per image kept us from evaluating it for weeks and getting the necessary practice, so we cannot give you more information about it.

Dev model

However, we love the impressive Dev model! With a short prompt, in natural language or not, you get amazing results. The quality is outstanding! The humans you can render are much better than with any other model we know! Hands and fingers are of much better quality than we were used to in the past. By the way, with the Schnell model you can also get amazing results for bodies and body parts when rendering humans, sometimes! Even on a 4080 computer, the Dev model needs more time to render. Rendering between 25 and 50 steps takes a while, especially when you use special ComfyUI settings to increase picture quality! Switching between models can take ages, because loading the 23 GB Dev and 23 GB Schnell checkpoints takes time! ControlNet functions and LoRAs increase the time to render a single picture even further!

(example Flux-Dev picture)

Impression

Status

Obviously, features are already out, such as LoRAs, ControlNet functions and ComfyUI workflows for the Flux models. On the paid API pages for Black Forest Labs models, it takes just one or two clicks to switch models, and you can use standard LoRAs and standard ControlNet functions there, depending on what the partner page offers. Using the Flux models in ComfyUI gives you many more options and more flexibility, and it is much easier to test things like the new prompting, the pixel size of the picture and the quality. You are not stuck with the settings and offerings of the API page! The recommendation is to use the Flux models with a square format like 1024×1024. We evaluated many formats and got very positive results with landscape formats. Apart from portrait pictures, vertical formats did not work as well for us as landscape formats.
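If you want to try landscape formats without straying far from the recommended 1024×1024, one approach is to keep the pixel count at about one megapixel and only change the aspect ratio. This sketch assumes that width and height should be multiples of 16, which is what we understand the Flux pipeline in Hugging Face diffusers to expect. The helper is our own and is not part of ComfyUI.

```python
import math


def flux_size(aspect_w: int, aspect_h: int,
              target_pixels: int = 1024 * 1024, multiple: int = 16) -> tuple[int, int]:
    """Return (width, height) near target_pixels for the given aspect ratio,
    with both sides rounded to the nearest multiple of `multiple`."""
    height = math.sqrt(target_pixels * aspect_h / aspect_w)
    width = height * aspect_w / aspect_h

    def snap(value: float) -> int:
        return max(multiple, round(value / multiple) * multiple)

    return snap(width), snap(height)


if __name__ == "__main__":
    for ratio in [(1, 1), (3, 2), (16, 9), (2, 3)]:
        print(ratio, flux_size(*ratio))
```

For 16:9 this gives 1360×768, which is slightly under one megapixel because of the rounding.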

Did you know?

The best thing about all Flux models is that inpainting, and spending hours on it, is needed far less often. Sometimes it even works to change the prompt slightly. For example, if you want a figure in a picture to wear a red shirt instead of a blue one, just try re-rendering with “red” in the prompt. This can even work for poses and other details. The rendering seems to work in a modular way. Most parts of the picture stay unchanged when you, for example, change the position of a hand via the prompt. This is not an official feature, but it is immensely helpful and something we do not know from any other model!
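This re-rendering trick relies on everything except the changed word staying the same. Here we assume that the seed, the steps and the image size are kept fixed. Below is a minimal sketch of that bookkeeping. `RenderJob` and `variant` are hypothetical names and are not part of ComfyUI or any API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RenderJob:
    """Hypothetical description of one render; adapt it to your own workflow."""
    prompt: str
    seed: int
    steps: int = 28  # illustrative default within the 25-50 range used for Dev
    width: int = 1024
    height: int = 1024


def variant(job: RenderJob, old: str, new: str) -> RenderJob:
    """Copy a job, swapping one word or phrase in the prompt and keeping the seed."""
    if old not in job.prompt:
        raise ValueError(f"{old!r} does not occur in the prompt")
    return replace(job, prompt=job.prompt.replace(old, new, 1))


if __name__ == "__main__":
    base = RenderJob(prompt="a man in a blue shirt waving at the camera", seed=42)
    print(variant(base, "blue shirt", "red shirt"))
```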

In addition, all three models can render text, such as labels and signs, in a picture. This feature is becoming increasingly popular, and you can do funny things with it!

Costs for you

Finally, we think Black Forest Labs has set a new standard for AI image generation with the quality of its models! Right now, their models are the benchmark! Using Flux pictures with AI video generators gives amazing results and works perfectly! That is not surprising, because video quality depends on the quality of every single frame. It is good that the new models set new standards for the quality of AI pictures and even AI videos. According to the Black Forest Labs homepage, their next focus is AI video!

Issues

Issues with body parts and rendering faults are still there. Fingers are rendered much better, but they are far from perfect, and the same goes for other body parts at a distance from the viewer. Portrait-style pictures give the best results, as with every other model out there. Sometimes we really wish for something like a negative prompt. For example, lots of pictures of humans rendered with Flux get jewelry. Rewriting a negative prompt as a positive one, like “without necklace”, does not work at all, and neither do prompts like “she hates jewelry”. We hope they keep working on new model versions, and we honestly hope that testing or playing around with them for free, as with ComfyUI today, will be possible again!

Open Wallet

Creating a LoRA (Low-Rank Adaptation, a way to adapt a large machine learning model for specific uses without retraining the entire model) can help you get good rendered pictures. For the Flux models you will need a lot of RAM and VRAM. Creating a LoRA for Flux is very time-consuming, and using one makes the rendering process take longer. API pages also offer to create LoRAs for you. One training turn costs 2€/$! If you create your LoRAs with sixteen turns, as with the “Kohya” method, it gets expensive!
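Why is a LoRA so much cheaper than retraining the whole model? For a weight matrix W of size d×k, a LoRA keeps W frozen and trains two small matrices, B (d×r) and A (r×k). Their product BA is added to W, and the rank r is small. The sketch counts the trainable numbers for one such matrix and also multiplies out the training cost from this paragraph. The 3072×3072 matrix and rank 16 are illustrative values we picked, not settings from any specific Flux LoRA trainer.

```python
def full_param_count(d: int, k: int) -> int:
    """Parameters updated when fine-tuning a d x k weight matrix directly."""
    return d * k


def lora_param_count(d: int, k: int, rank: int) -> int:
    """Trainable parameters of a LoRA update B @ A with B: d x rank, A: rank x k."""
    return rank * (d + k)


def lora_training_cost(turns: int, price_per_turn: float) -> float:
    """Total cost when every training turn on an API page is billed separately."""
    return turns * price_per_turn


if __name__ == "__main__":
    d = k = 3072  # illustrative matrix size
    rank = 16     # illustrative LoRA rank
    full = full_param_count(d, k)
    lora = lora_param_count(d, k, rank)
    print(f"full: {full:,}  LoRA: {lora:,}  ({lora / full:.1%})")
    print(f"16 turns at 2 per turn: {lora_training_cost(16, 2.0)}")
```

With these numbers, the LoRA trains about 1% of that matrix's parameters, and sixteen paid turns at 2€/$ add up to 32€/$.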

Example prices (in $ or €) for rendering one picture with each Flux model on API pages are about:

Dev: 0.025

Schnell: 0.003

Pro: 0.05

These prices are common, and the number of websites offering these services is exploding. You might think that this is cheap. To be honest, it is not cheap at all! Getting the pictures rendered the way you like takes a lot of time, with rendering and re-prompting again and again. With Stability AI models you got about one good picture out of a hundred. With Flux the rate is better, let us say 5 or 10 out of 100! At 5 out of 100, for instance, each good Dev picture costs you 50 cents. We tried to talk with the people at Black Forest Labs via email about it, but did not get any positive feedback about the ratio of bad results or about having to pay for them!
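The 50 cents come from simple arithmetic. If you pay for every render but only some renders are keepers, the real price per good picture is the price per render divided by the hit rate. Here is a small sketch using the example prices listed above and the assumed 5 or 10 keepers per 100 renders. `Decimal` avoids floating-point rounding on cent amounts.

```python
from decimal import Decimal

# Example per-image prices from the list above (currency as charged by the API page).
PRICES = {"Dev": Decimal("0.025"), "Schnell": Decimal("0.003"), "Pro": Decimal("0.05")}


def cost_per_keeper(price_per_render: Decimal, keepers: int, renders: int = 100) -> Decimal:
    """Total cost of `renders` images divided by the number of usable ones."""
    if keepers <= 0:
        raise ValueError("need at least one usable picture")
    return price_per_render * renders / keepers


if __name__ == "__main__":
    for model, price in PRICES.items():
        for keepers in (5, 10):
            cost = cost_per_keeper(price, keepers)
            print(f"{model:<8} {keepers:>2}/100 usable: {cost:.3f} per good picture")
```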

The future is bright

Black Forest Labs focuses on paid API pages that offer its services. They will also be interested in improving their models with the help of the people who render images. That is why they stick to API pages, to collect feedback and money! We understand that they need to earn money with their models, and it is logical that they now have Freepik as a partner. We have no detailed information, but it would not be surprising to find their models in painting software like Adobe's in the near future. If you are used to rendering between 50k and 150k pictures per month, then we guess you will not use the API or partner pages for the Flux models! Again, we asked via email about the costs. The answer was that we would have to pay 5k per month for this amount of rendering, and that we could get our own API link for it!
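To put the quoted 5k per month into perspective, you can compare it with paying per image. The sketch below uses the per-image prices listed above. The currency of the 5k offer was not specified, so treating it as the same currency as the per-image prices is an assumption.

```python
from decimal import Decimal

FLAT_FEE_PER_MONTH = Decimal("5000")  # the quoted "5k per month"; currency assumed


def monthly_pay_per_image(images: int, price_per_image: Decimal) -> Decimal:
    """What the same monthly volume would cost at a per-image price."""
    return images * price_per_image


def break_even_volume(flat_fee: Decimal, price_per_image: Decimal) -> Decimal:
    """Images per month above which the flat fee is cheaper than paying per image."""
    return flat_fee / price_per_image


if __name__ == "__main__":
    for label, price in [("Dev", Decimal("0.025")), ("Pro", Decimal("0.05"))]:
        print(f"{label}: 50k images = {monthly_pay_per_image(50_000, price):,.2f}, "
              f"150k images = {monthly_pay_per_image(150_000, price):,.2f}, "
              f"break-even = {break_even_volume(FLAT_FEE_PER_MONTH, price):,.0f} images")
```

At the Dev price, the flat fee only pays off above 200,000 images a month. At the Pro price, it pays off above 100,000.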

Stay tuned. The market is moving fast, and NVIDIA's Sana model with LLM-based prompting is close to becoming public! Even a “monster” model called Redpanda/RecraftV3 is about to go public! Rumors say it will be better than all the Flux models!

Happy AI generation!